How Semantic Understanding and ToF Point Clouds Improve Robot Navigation

(January 9, 2026) How Semantic Understanding and ToF Point Cloud Perception Enhance Mobile Robot Navigation

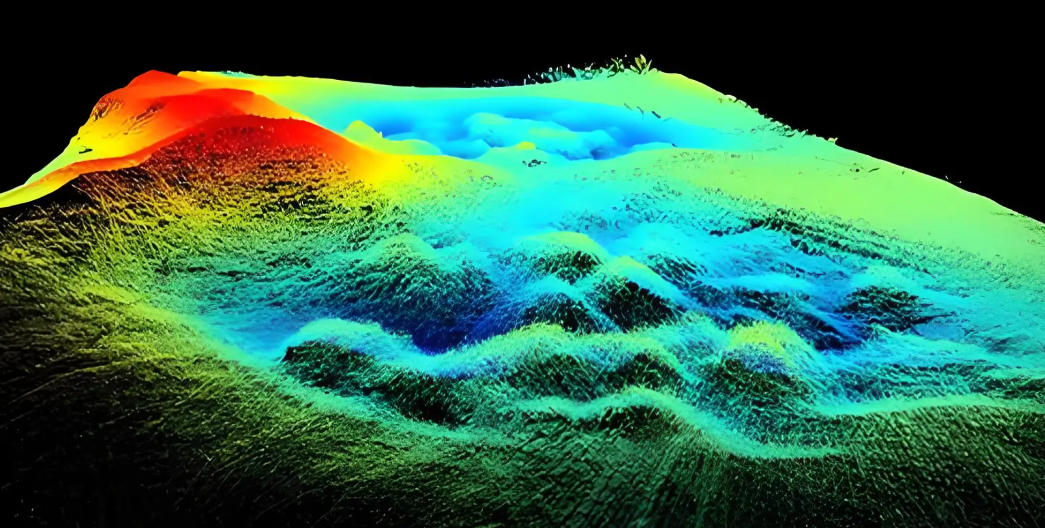

With the rapid evolution of mobile robot navigation, autonomous driving systems, and smart warehouse automation, traditional perception methods based solely on geometry or vision are no longer sufficient. Today, the integration of Time-of-Flight (ToF) point cloud sensing and semantic understanding has become a core technology for enabling robots to perceive, understand, and intelligently interact with complex environments.

By combining high-precision 3D point cloud perception with AI-based semantic analysis, mobile robots can achieve accurate localization, intelligent obstacle avoidance, and autonomous decision-making. This technology stack is now widely adopted in industrial automation, autonomous mobile robots (AMR), AGV systems, service robots, logistics robots, and autonomous vehicles.

1. What Is a Time-of-Flight (ToF) Sensor?

A Time-of-Flight (ToF) sensor is a depth-sensing device that calculates distance by measuring the time it takes for emitted light to travel to an object and return to the sensor. Using this principle, ToF sensors generate high-resolution depth maps and 3D point cloud data in real time.

ToF depth cameras are essential components in modern robotic perception systems, supporting 3D environment modeling, SLAM, collision avoidance, and intelligent navigation, even in challenging lighting conditions.

Working Principle of ToF Sensors

The sensor actively emits infrared or laser light pulses

Light reflects off object surfaces

The reflected signal returns to the sensor

The round-trip flight time is measured

Distance is calculated using the speed of light

Multiple measurements are fused into a depth map or 3D point cloud

Because ToF sensors provide their own illumination and do not depend on surface texture, they perform reliably in low-light and low-texture environments. Highly reflective or transparent surfaces can still degrade depth accuracy.
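
The distance step in the working principle above reduces to d = c × t / 2, because the light covers the sensor-to-object path twice. A minimal sketch in Python follows; the function name `tof_distance_m` is illustrative and does not come from any sensor SDK.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by SI definition


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from a measured round-trip time.

    The emitted light travels to the object and back, so the one-way
    distance is half of (speed of light x round-trip time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A 20 ns round trip corresponds to roughly 3 m.
print(f"{tof_distance_m(20e-9):.3f} m")
```

The example also shows why timing precision matters: at these scales, one nanosecond of timing error is about 15 cm of range error.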

2. Fundamentals of Point Cloud Detection in Mobile Robotics

In autonomous navigation systems, self-driving vehicles, and intelligent industrial robots, point cloud detection is the foundation of environmental perception.

2.1 What Is Point Cloud Detection?

Point cloud detection refers to the process of capturing three-dimensional spatial data using ToF sensors, LiDAR, or RGB-D cameras, where each point represents a position on an object’s surface in 3D space.

Millions of such points form a digital 3D representation of the environment, enabling robots to understand spatial structure and geometry.
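
To illustrate how a depth map becomes a point cloud, the sketch below back-projects each depth pixel through a pinhole camera model. It assumes depth values in metres and known intrinsics (`fx`, `fy`, `cx`, `cy`); the values used in the example are made up, and a real sensor would supply its own calibration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]  # camera frame: x right, y down, z forward


def depth_to_points(depth_m: List[List[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (metres) into camera-frame 3D points.

    Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Tiny 2x2 depth image with one invalid pixel; intrinsics are illustrative.
depth = [[1.0, 1.0],
         [0.0, 2.0]]
cloud = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```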

2.2 Key Functions of Point Cloud Perception

Obstacle Detection and Collision Avoidance

Detects static and dynamic obstacles such as walls, shelves, machinery, pedestrians, and vehicles

Supports real-time path replanning and dynamic obstacle avoidance algorithms

Improves operational safety in crowded and complex environments
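
As a rough illustration of geometric obstacle detection, the sketch below looks for the nearest point inside a rectangular corridor in front of the robot. The robot-frame convention, corridor dimensions, and stop distance are all assumptions chosen for the example, not values from any particular platform.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]  # robot frame: x forward, y left, z up (metres)


def nearest_obstacle_ahead(points: Iterable[Point],
                           half_width_m: float,
                           min_height_m: float,
                           max_height_m: float) -> Optional[float]:
    """Forward distance to the closest point inside the travel corridor,
    or None if the corridor is clear."""
    nearest: Optional[float] = None
    for x, y, z in points:
        in_corridor = x > 0.0 and abs(y) <= half_width_m
        # The height band drops floor returns and points above the robot.
        in_height_band = min_height_m <= z <= max_height_m
        if in_corridor and in_height_band and (nearest is None or x < nearest):
            nearest = x
    return nearest


def should_stop(points: Iterable[Point], stop_distance_m: float = 0.8) -> bool:
    """True if any corridor point is closer than the stop distance."""
    d = nearest_obstacle_ahead(points, half_width_m=0.4,
                               min_height_m=0.05, max_height_m=1.5)
    return d is not None and d < stop_distance_m
```

A planner would typically use the returned distance to slow down or trigger replanning rather than only stopping.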

3D Mapping and Localization (SLAM)

Provides essential input for 3D SLAM (Simultaneous Localization and Mapping)

Enables robots to build accurate maps for long-term autonomous navigation

Widely used in warehouses, factories, hospitals, and outdoor facilities
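
Full SLAM estimates the robot pose and the map together. The sketch below covers only the mapping half, under the assumption that the pose is already known: it transforms 2D points from the robot frame into the map frame and marks the grid cells they fall in. The function name and the set-based grid are illustrative choices.

```python
import math
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]


def mark_occupied(points_robot: Iterable[Tuple[float, float]],
                  pose: Tuple[float, float, float],
                  resolution_m: float,
                  occupied: Set[Cell]) -> None:
    """Add the grid cells hit by the given points to `occupied`.

    `pose` is (x, y, heading in radians) of the robot in the map frame.
    Each point is rotated by the heading, translated by the position,
    and then quantized to a cell index.
    """
    px, py, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    for x, y in points_robot:
        mx = px + c * x - s * y
        my = py + s * x + c * y
        occupied.add((math.floor(mx / resolution_m),
                      math.floor(my / resolution_m)))


# Robot at (1, 1) facing along +x, with one return 0.3 m ahead and to the left.
grid: Set[Cell] = set()
mark_occupied([(0.3, 0.3)], pose=(1.0, 1.0, 0.0), resolution_m=0.5, occupied=grid)
```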

Dynamic Environment Monitoring

Tracks object movement and scene changes

Supports logistics monitoring, inventory management, and industrial inspection

Enhances efficiency and situational awareness
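
One simple way to detect scene changes is to compare which voxels are occupied in two scans taken from the same viewpoint. The sketch below assumes both point sets are already expressed in the same frame; the voxel size and function names are illustrative.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def occupied_voxels(points: Iterable[Point], voxel_m: float) -> Set[Voxel]:
    """Quantize points to integer voxel indices."""
    return {(math.floor(x / voxel_m),
             math.floor(y / voxel_m),
             math.floor(z / voxel_m)) for x, y, z in points}


def scene_changes(before: Iterable[Point],
                  after: Iterable[Point],
                  voxel_m: float) -> Tuple[Set[Voxel], Set[Voxel]]:
    """Return (appeared, disappeared) voxels between two scans."""
    a = occupied_voxels(before, voxel_m)
    b = occupied_voxels(after, voxel_m)
    return b - a, a - b


# A new object shows up about 1 m along x in the second scan.
appeared, disappeared = scene_changes(
    before=[(0.05, 0.05, 0.05)],
    after=[(0.05, 0.05, 0.05), (1.05, 0.05, 0.05)],
    voxel_m=0.1,
)
```

In practice, sensor noise means a threshold on the number of changed voxels is usually needed before reporting a change.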

2.3 Advantages of ToF Sensors for Point Cloud Generation

Depth accuracy in the millimeter-to-centimeter range, depending on the sensor and working distance, for precise navigation

Low latency and high frame rate, ideal for real-time systems

Lower data volume compared to traditional LiDAR, reducing processing load

Indoor and outdoor adaptability, suitable for diverse applications

These advantages make ToF sensors highly suitable for AMR navigation, AGV systems, service robots, and inspection robots.

3. Limitations of Geometry-Only Point Cloud Perception

Despite their strengths, point clouds alone have inherent limitations when used without semantic interpretation.

3.1 Lack of Semantic Meaning

Point clouds describe shape and position but not object identity

Robots cannot distinguish functional differences (e.g., door vs. wall, person vs. pillar)

Navigation decisions remain purely geometric and less intelligent

3.2 Environmental Sensitivity

Outdoor conditions such as rain, fog, snow, or strong sunlight may introduce noise

Highly reflective or transparent surfaces affect depth accuracy

3.3 High Computational Complexity

Dense point clouds require filtering, segmentation, and registration

Real-time performance often demands GPU acceleration or edge AI computing
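
A common first filtering step is voxel-grid downsampling, which replaces all points that fall in the same voxel with their centroid. The pure-Python sketch below shows the idea; production systems would normally rely on an optimized point cloud library instead.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Iterable[Point], voxel_m: float) -> List[Point]:
    """Replace all points that share a voxel with their centroid."""
    if voxel_m <= 0:
        raise ValueError("voxel size must be positive")
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / voxel_m),
               math.floor(y / voxel_m),
               math.floor(z / voxel_m))
        buckets[key].append((x, y, z))
    reduced: List[Point] = []
    for pts in buckets.values():
        n = len(pts)
        reduced.append((sum(p[0] for p in pts) / n,
                        sum(p[1] for p in pts) / n,
                        sum(p[2] for p in pts) / n))
    return reduced


# Two nearby points merge into one; the distant point is kept as-is.
small = voxel_downsample(
    [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.0, 1.0, 1.0)], voxel_m=0.1)
```

A larger voxel size lowers the point count and the cost of later segmentation and registration, at the price of spatial detail.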

3.4 Need for Multi-Sensor Fusion

Single-sensor perception is insufficient for complex scenarios

Fusion with RGB cameras, IMU, GPS, and radar significantly improves robustness
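
To show why fusion improves robustness, the sketch below combines two independent range measurements by inverse-variance weighting. This is the static, single-value case; real systems usually apply the same principle inside a Kalman filter or a similar estimator. The variances in the example are invented.

```python
from typing import Tuple


def fuse_ranges(z1: float, var1: float,
                z2: float, var2: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent measurements.

    Returns (fused estimate, fused variance). The fused variance is
    always smaller than either input variance.
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    return fused, 1.0 / (w1 + w2)


# A ToF reading and a radar reading of the same obstacle (illustrative values).
estimate, variance = fuse_ranges(2.0, 0.04, 2.2, 0.04)
```

With equal variances the result is the plain average, and the variance is halved; with unequal variances the more trustworthy sensor dominates.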

4. Role of Semantic Understanding in Intelligent Navigation

4.1 What Is Semantic Understanding?

Semantic understanding uses deep learning, computer vision, and 3D point cloud semantic segmentation to enable robots to identify, classify, and interpret objects and scenes.

It allows robots not only to detect obstacles, but also to understand what they are and how to interact with them.

4.2 Core Capabilities of Semantic Perception

3D Object Recognition and Semantic Segmentation

Classifies floors, walls, doors, stairs, shelves, people, and vehicles

Enables 3D object detection and instance segmentation
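
One way to attach semantics to ToF geometry is to run a 2D segmentation model on an aligned image and copy each pixel's class onto the corresponding 3D point. The sketch below assumes a pixel-aligned class mask already exists (the model that produces it is not shown) and that the class IDs and names are hypothetical.

```python
from typing import Dict, List, Tuple

LabeledPoint = Tuple[float, float, float, str]


def label_points(depth_m: List[List[float]],
                 class_mask: List[List[int]],
                 class_names: Dict[int, str],
                 fx: float, fy: float,
                 cx: float, cy: float) -> List[LabeledPoint]:
    """Back-project valid depth pixels and tag each point with the class
    of the mask pixel at the same (row, column)."""
    labeled: List[LabeledPoint] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth_m, class_mask)):
        for u, (z, class_id) in enumerate(zip(depth_row, mask_row)):
            if z <= 0.0:
                continue  # invalid depth
            name = class_names.get(class_id, "unknown")
            labeled.append(((u - cx) * z / fx, (v - cy) * z / fy, z, name))
    return labeled


# One-row example: a floor pixel and a pixel whose class ID is not in the table.
semantic_cloud = label_points(
    depth_m=[[1.0, 2.0]],
    class_mask=[[0, 7]],
    class_names={0: "floor", 1: "person"},
    fx=1.0, fy=1.0, cx=0.0, cy=0.0,
)
```

Networks that segment the point cloud directly are an alternative; this projection approach is shown only because it is easy to follow.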

Traversable Area and Scene Understanding

Distinguishes navigable paths from non-traversable regions

Differentiates static structures from moving objects

Improves path planning efficiency and safety
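
Semantic labels can feed path planning through a costmap in which each grid cell's cost depends on its class. The sketch below uses a hypothetical cost table; the class names and cost values are assumptions for illustration, and unknown labels are conservatively treated as blocked.

```python
from typing import Dict, List, Optional

# Hypothetical per-class traversal costs; None means "never drive here".
CLASS_COST: Dict[str, Optional[float]] = {
    "floor": 1.0,
    "door": 2.0,      # passable, but slightly discouraged
    "person": None,
    "wall": None,
    "stairs": None,
}

Costmap = List[List[Optional[float]]]


def build_costmap(label_grid: List[List[str]]) -> Costmap:
    """Convert a grid of class labels into traversal costs.

    Labels missing from CLASS_COST map to None (blocked).
    """
    return [[CLASS_COST.get(label) for label in row] for row in label_grid]


def is_traversable(costmap: Costmap, row: int, col: int) -> bool:
    """True if the cell has a finite traversal cost."""
    return costmap[row][col] is not None


costmap = build_costmap([["floor", "door", "wall"],
                         ["floor", "person", "shelf"]])
```

A grid planner such as A* could then search over the traversable cells, using the cost values as edge weights.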

Intelligent Task Planning and Decision-Making

Builds semantic maps for high-level planning

Supports autonomous task execution and multi-robot collaboration

5. Benefits of Integrating ToF Sensors with Semantic Understanding

5.1 Accurate 3D Semantic Perception

ToF sensors provide precise spatial geometry, while semantic algorithms assign meaning to the data—creating a complete 3D semantic perception system.

5.2 Robust Performance in Challenging Lighting

ToF depth sensing works in darkness or extreme lighting

Enhances reliability in warehouses, underground spaces, and night-time operations

5.3 Dynamic Object Tracking and Prediction

Identifies pedestrians, vehicles, and moving robots

Predicts motion trajectories for safer navigation
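
The simplest trajectory predictor assumes constant velocity: estimate velocity from two consecutive observations and extrapolate. The sketch below does exactly that in 2D; it is a baseline under that assumption, not a model of how pedestrians or vehicles actually move.

```python
from typing import List, Tuple

Position = Tuple[float, float]


def predict_positions(p_prev: Position, p_curr: Position,
                      dt_s: float, step_s: float,
                      num_steps: int) -> List[Position]:
    """Constant-velocity extrapolation of a tracked object's position.

    Velocity is estimated from two observations taken dt_s apart, then
    used to predict num_steps future positions spaced step_s apart.
    """
    if dt_s <= 0 or step_s <= 0:
        raise ValueError("time intervals must be positive")
    vx = (p_curr[0] - p_prev[0]) / dt_s
    vy = (p_curr[1] - p_prev[1]) / dt_s
    return [(p_curr[0] + vx * k * step_s, p_curr[1] + vy * k * step_s)
            for k in range(1, num_steps + 1)]


# Object moved 0.1 m along x in 0.1 s, so about 1 m/s; predict 0.5 s and 1.0 s ahead.
future = predict_positions((0.0, 0.0), (0.1, 0.0),
                           dt_s=0.1, step_s=0.5, num_steps=2)
```

The predicted positions can be inflated into keep-out regions so the planner avoids where the object is expected to be, not only where it is now.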

5.4 Multi-Sensor Semantic SLAM

Combines ToF, RGB cameras, and IMU

Improves localization accuracy and map consistency

6. Application Scenarios

Intelligent Warehouse Robots

Shelf modeling using ToF point clouds

Semantic recognition of goods and storage areas

Automated picking and optimized route planning

Service Robots

Navigation in malls, hotels, hospitals, and airports

Human detection and crowd-aware path planning

Enhanced human–robot interaction

Autonomous Vehicles and Outdoor Robots

Detection of pedestrians, vehicles, and road structures

Supports autonomous driving perception pipelines

Industrial Inspection Robots

Equipment recognition and anomaly detection

Safe navigation in complex industrial environments

7. Technology Trends and Future Directions

Continued advancement in ToF-based 3D semantic segmentation

AI accelerators and edge computing for real-time perception

Shared semantic maps for multi-robot collaboration

Unified indoor–outdoor navigation architectures

Conclusion

By integrating ToF point cloud perception with semantic understanding, mobile robots gain the ability to accurately map their environment, recognize objects, and make intelligent navigation decisions in dynamic and complex scenarios.

This powerful combination is becoming a cornerstone technology for autonomous driving, smart warehouses, industrial robots, and service robotics, enabling safer, more efficient, and more intelligent autonomous systems.

